StoryMem

Multi-shot Long Video Storytelling with Memory

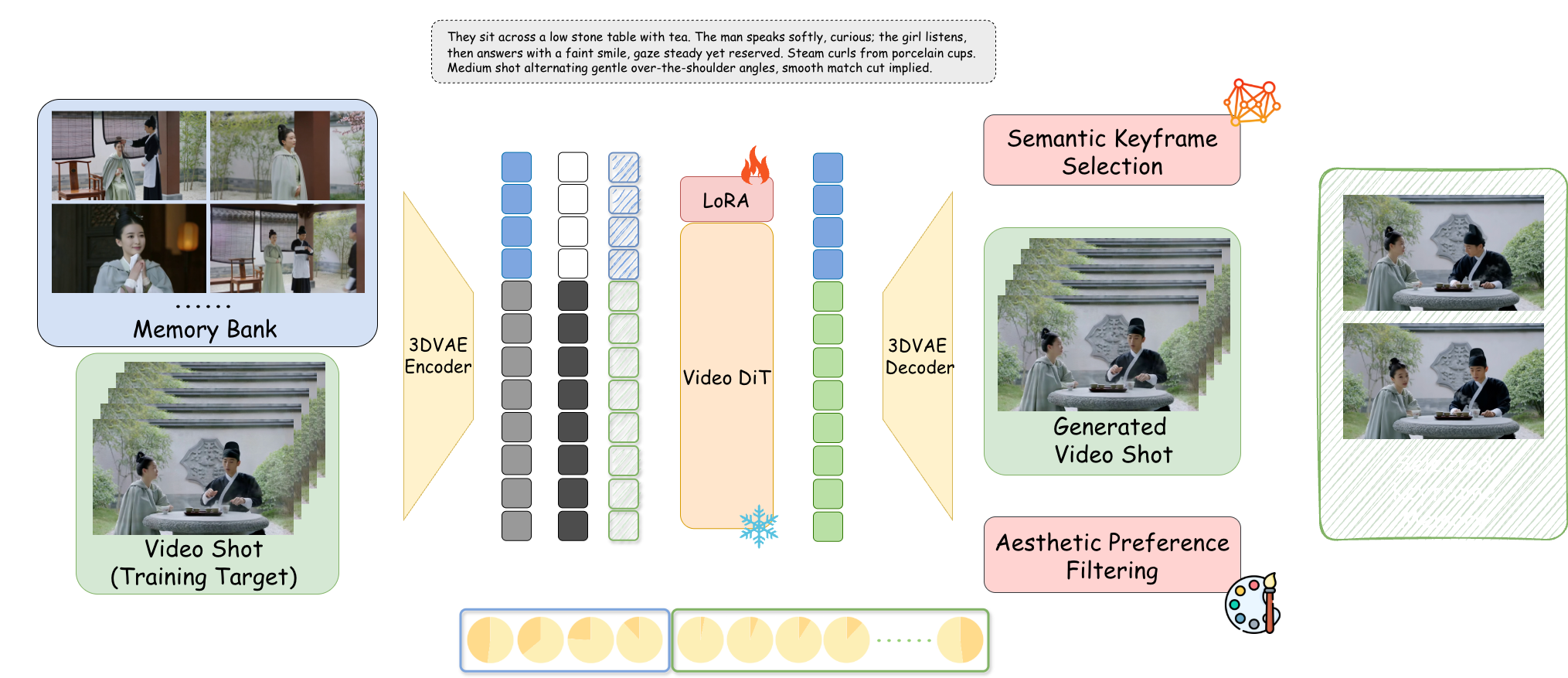

From "random generation" to "director thinking". Through Explicit Visual Memory Banks and Negative RoPE Shift technology, StoryMem solves the "forgetting" problem in multi-shot storytelling, achieving cinematic cross-shot consistency.

Core Breakthroughs & Evolution

The "Third Way" of Video Generation Technology

Core Pain Point: Multi-shot "Forgetting"

Existing DiT models treat each generation as an independent random process. When cutting from "wide shot" to "close-up", pixel-level features (hairstyle, clothing texture) often mutate, breaking immersion.

Explicit Memory Bank

Beyond high-compute Joint Modeling and simple Auto-regression. StoryMem establishes a dynamic memory module independent of the generation process, maintaining narrative context like human working memory.

Negative RoPE Shift

Singularity: By assigning negative time indices to memory frames, it creates an attention mechanism that is "semantically connected but spatiotemporally disconnected". Perfectly simulates film Hard Cuts, retaining identity while resetting motion.

M2V Architecture Deep Dive

Memory Bank Construction

Based on Wan2.2-I2V + LoRA fine-tuning. Uses intelligent filtering to keep only keyframes from the last 10 active shots, simulating human "working memory" to avoid VRAM exhaustion.

Semantic Keyframe Selection

Introduces CLIP model to calculate cosine similarity. Stores only frames that best reflect current script descriptions (e.g., "angry expression"), discarding blurry or blinking intermediate frames.

Aesthetic Preference Filtering

Integrates HPSv3 scoring model as "AI Art Director". Filters out collapsed frames in real-time, ensuring only high-aesthetic quality material remains in memory.

Latent Space Concatenation

Memory frames are compressed into Latents via 3D VAE, concatenated with current noise vectors in channel dimension, and injected via Rank=128 LoRA adapter.

ST-Bench Authoritative Evaluation

Quantitative assessment based on 300 multi-shot narrative prompts

Based on ViCLIP cosine similarity, accurately quantifying protagonist identity retention.

LAION aesthetic predictor score, proving memory module did not degrade artistic standards.

Memory mechanism did not interfere with model understanding of new instructions, accurately responding to script changes.

Script-to-Video Storytelling

JSON structured script input, control shots like a director

Dream of Red Mansions: Daiyu Enters

AtmosphericComparison & Positioning

Compared to models like Open-Sora focused on physics simulation, StoryMem focuses on Cinematic Narrative Logic.

❌ Random Process: Character appearance mutates with shot changes, model cannot maintain consistent image.

✅ Memory Injection: Even under different angles and lighting, structural details (eyes, color) remain highly consistent.

vs. Sora / Open-Sora

Sora tends to generate single continuous long videos. StoryMem pursues Narrative Sequences composed of 5-10s shots, fitting professional film editing pipelines.

vs. AnimateDiff

AnimateDiff excels at stylized loops but creates morphing transitions during "Hard Cuts". StoryMem solves this perfectly via Negative RoPE.

vs. IP-Adapter

IP-Adapter relies on single reference image, prone to collapse in complex dynamics. StoryMem's dynamic memory captures multi-angle features.

StoryMem proves that "Memory" is key to advanced intelligent storytelling. Although currently limited by base model quality (e.g., hand details) and VRAM costs (24GB recommended), it opens the door to "One-Person Film Studios".

Future Outlook: Introducing Audio Memory Banks for voice consistency; exploring infinite generation possibilities for Interactive Movies & Games with real-time rendering.

📄 Read Full Paper (ArXiv)