Abstract

While modern AI has made great strides in generating audio from video, creating high-fidelity sound that truly matches the nuance of visual content remains a major hurdle. Professional sound design requires complex reasoning about visual cues, acoustics, and timing, a skill that has been difficult to replicate in AI. This paper introduces ThinkSound, a framework that teaches AI to "think" like a sound designer. By using Chain-of-Thought (CoT) reasoning, ThinkSound breaks down the complex task of audio generation into logical, manageable steps. This supports not only creating sound from scratch but also interactive, object-focused editing and refinement using simple natural language commands. To power this, we also present AudioCoT, a first-of-its-kind dataset designed to train models on this reasoning process. Our experiments show that ThinkSound sets a new state of the art in both audio quality and relevance, and performs well even on complex, out-of-distribution movie scenes.

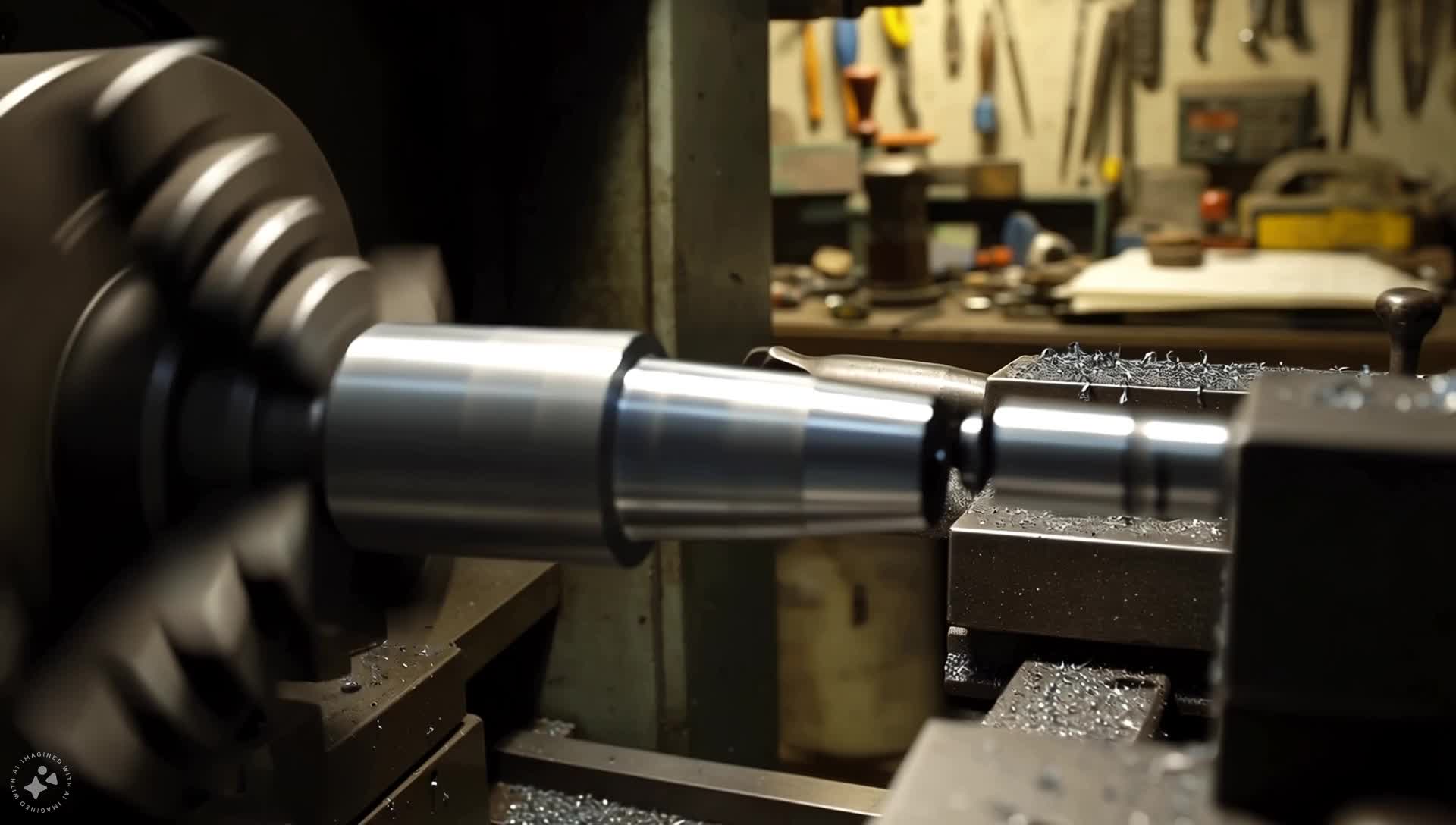

Synergy with Video Generation Models

ThinkSound seamlessly adds rich, synchronized soundscapes to videos created by leading generative models. The videos below were generated by their respective models; all audio was created by ThinkSound.

Veo + ThinkSound

Sora + ThinkSound

MovieGen + ThinkSound

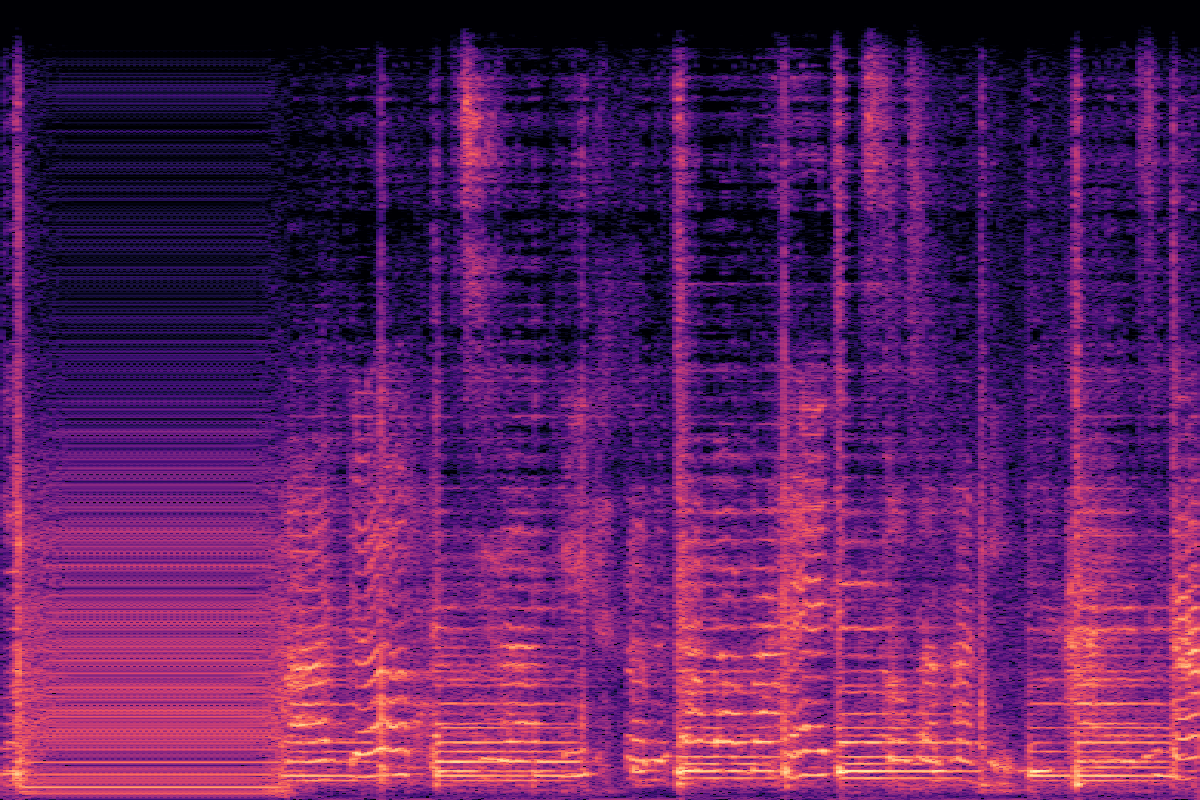

V2A Comparisons on VGGSound (In-distribution)

Each row pairs a CoT description with the ground-truth audio and the outputs of ThinkSound, MMAudio, and See&Hear for the same clip.

| CoT | Ground Truth | ThinkSound | MMAudio | See&Hear |
|---|---|---|---|---|
| Playing Tennis: Generate sounds of tennis hitting a racket and the ball bouncing... |  |  |  |  |
| Printer Printing: Generate a continuous printer printing sound with periodic beeps... |  |  |  |  |
| Ripping Paper: Start with a subtle tearing sound of paper being ripped... |  |  |  |  |
| Using Sewing Machines: Generate ambient sewing room sounds with consistent sewing machine hum... |  |  |  |  |

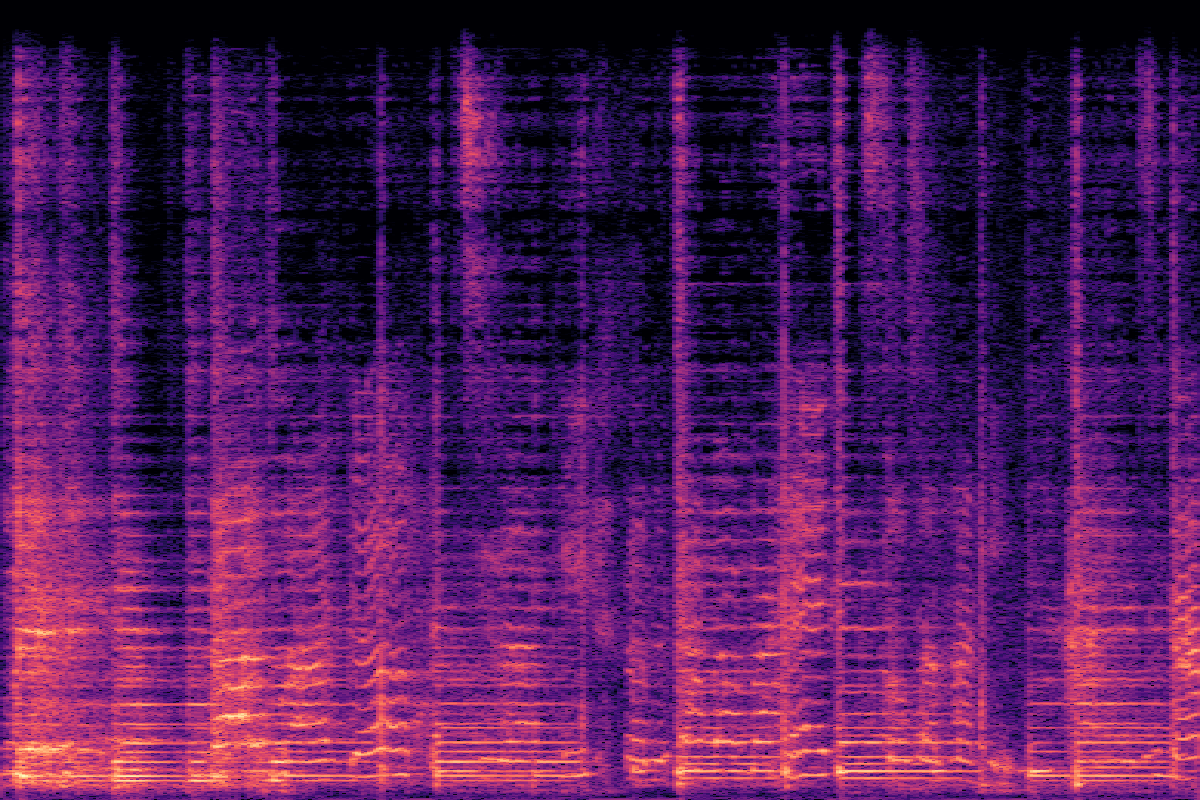

V2A Comparisons on MovieGen Audio (Out-of-Distribution)

See how ThinkSound performs on challenging, out-of-distribution movie clips.

| CoT | ThinkSound | Movie Gen Audio | MMAudio |

|---|---|---|---|

| Gentle Sucking Sounds Soft, steady background of light pacifier suckling... |  |  |  |

| Harmonious Strings Acoustic guitar strings humming and buzzing... |  |  |  |

| Old TV Humming Ambient background noise with faint static and white noise... |  |  |  |

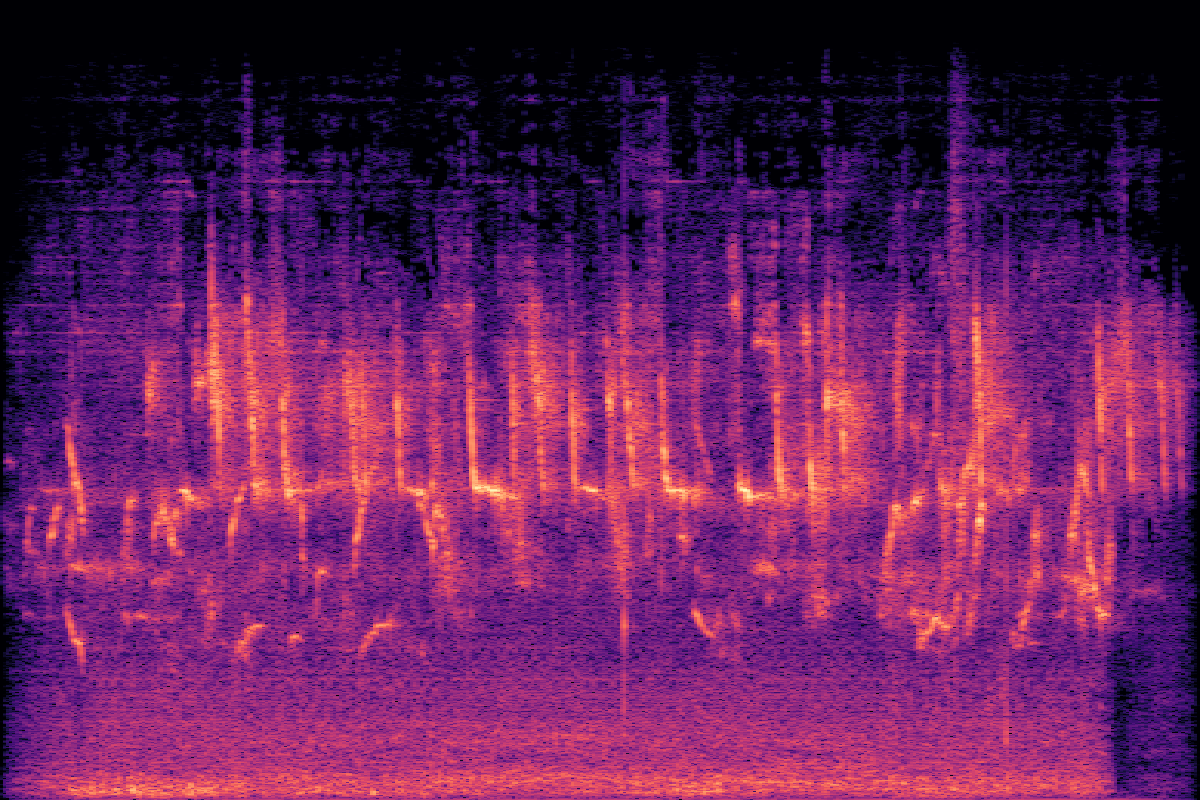

| Intense Thunder A low wind hum and occasional crackles add to the stormy atmosphere... |  |  |  |

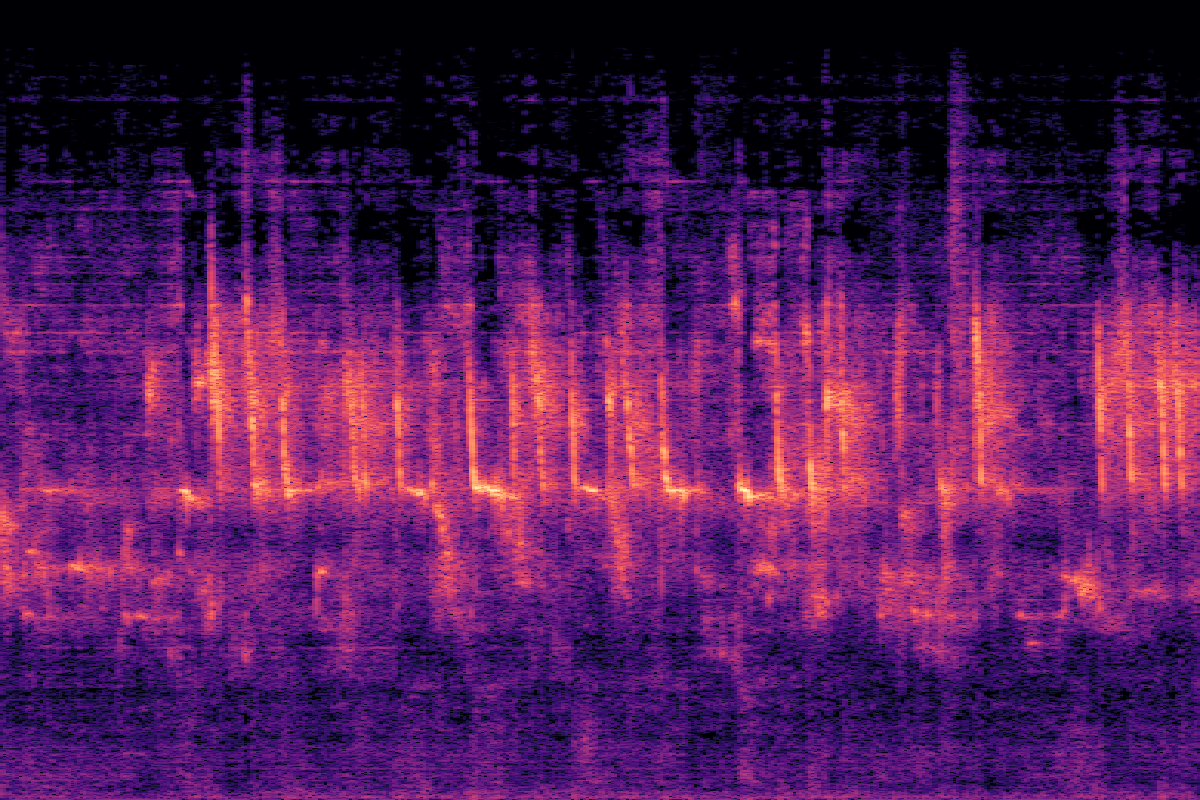

Interactive Step-by-Step Foley Creation

V2A Gen → Object-Focus → Audio Inpainting

V2A Gen → Object-Focus → Audio Editing

Experiments

Main Results on VGGSound

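ThinkSound outperforms all baselines on most objective metrics and on all subjective metrics, with substantial improvements in audio quality and semantic alignment. It achieves the lowest FD (34.56), the lowest KL scores, the highest CoT-based CLAP score (0.46), and the shortest reported time among the baselines (1.07 s). The exceptions are DeSync, where MMAudio is slightly better (0.44 vs. 0.46), and the caption-based CLAP score, where FoleyCrafter, V2A-Mapper, and Frieren score higher.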

Objective metrics: FD, KL_PaSST, KL_PaNNs, DeSync, CLAP_cap, CLAP_CoT. Subjective metrics: MOS-Q, MOS-A. Efficiency: Params, Time.

| Method | FD ↓ | KL_PaSST ↓ | KL_PaNNs ↓ | DeSync ↓ | CLAP_cap ↑ | CLAP_CoT ↑ | MOS-Q ↑ | MOS-A ↑ | Params | Time (s) ↓ |
|---|---|---|---|---|---|---|---|---|---|---|
| GT | - | - | - | 0.55 | 0.28 | 0.45 | 4.37±0.21 | 4.56±0.19 | - | - |
| See&Hear | 118.95 | 2.26 | 2.30 | 1.20 | 0.32 | 0.35 | 2.75±1.08 | 2.87±0.99 | 415M | 19.42 |
| V-AURA† | 46.99 | 2.23 | 1.83 | 0.65 | 0.23 | 0.37 | 3.42±1.03 | 3.20±1.17 | 695M | 14.00 |
| FoleyCrafter | 39.15 | 2.06 | 1.89 | 1.21 | 0.41 | 0.34 | 3.08±1.21 | 2.63±0.88 | 1.20B | 3.84 |
| Frieren† | 74.96 | 2.55 | 2.64 | 1.00 | 0.37 | 0.34 | 3.27±1.11 | 2.95±1.09 | 159M | - |
| V2A-Mapper† | 48.10 | 2.50 | 2.34 | 1.23 | 0.38 | 0.32 | 3.31±1.02 | 3.16±1.04 | 229M | - |
| MMAudio | 43.26 | 1.65 | 1.40 | 0.44 | 0.31 | 0.40 | 3.84±0.89 | 3.97±0.82 | 1.03B | 3.01 |
| ThinkSound | 34.56 | 1.52 | 1.32 | 0.46 | 0.33 | 0.46 | 4.02±0.73 | 4.18±0.79 | 1.30B | 1.07 |
| w/o CoT Reasoning | 39.84 | 1.59 | 1.40 | 0.48 | 0.29 | 0.41 | 3.91±0.83 | 4.04±0.75 | 1.30B | 0.98 |
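
To make the effect of CoT reasoning concrete, the relative change between the last two rows of the table can be computed directly. The snippet below is a small arithmetic sketch: the helper name `relative_change` and the dictionary keys are our own labels, and the numbers are copied from the ThinkSound and w/o CoT Reasoning rows.

```python
# Values copied from the "ThinkSound" and "w/o CoT Reasoning" rows of the table.
# The dictionary keys are illustrative labels, not identifiers from the paper.
with_cot = {"FD": 34.56, "KL_PaSST": 1.52, "KL_PaNNs": 1.32, "DeSync": 0.46, "CLAP_CoT": 0.46}
without_cot = {"FD": 39.84, "KL_PaSST": 1.59, "KL_PaNNs": 1.40, "DeSync": 0.48, "CLAP_CoT": 0.41}


def relative_change(new: float, old: float) -> float:
    """Signed percentage change when moving from `old` to `new`."""
    return (new - old) / old * 100.0


# Negative values mean the metric decreased once CoT reasoning was enabled.
changes = {name: relative_change(with_cot[name], without_cot[name]) for name in with_cot}

for name, pct in changes.items():
    print(f"{name}: {pct:+.1f}%")
```

Under these numbers, enabling CoT reasoning lowers FD by roughly 13% and raises the CoT-based CLAP score by roughly 12%, while the time per sample rises slightly (0.98 s to 1.07 s).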

Ablation Studies

We investigated the contribution of each component to validate our design choices, focusing on text encoding, multi-modal integration, and model size.

Text Encoding Strategies

| Method | FD ↓ | KL_PaSST ↓ | KL_PaNNs ↓ | DeSync ↓ | CLAP ↑ |
|---|---|---|---|---|---|
| CLIP | 39.84 | 1.59 | 1.40 | 0.48 | 0.41 |
| T5 (CoT) | 37.65 | 1.54 | 1.35 | 0.46 | 0.44 |
| CLIP + T5 | 34.56 | 1.52 | 1.32 | 0.46 | 0.46 |

Multi-Modal Integration

| Integration | FD ↓ | KL_PaSST ↓ | KL_PaNNs ↓ | DeSync ↓ | CLAP ↑ |
|---|---|---|---|---|---|
| audio only | 37.13 | 1.58 | 1.37 | 0.50 | 0.43 |
| linear video | 38.96 | 1.58 | 1.38 | 0.46 | 0.45 |
| gated video | 34.56 | 1.52 | 1.32 | 0.46 | 0.46 |
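
The table contrasts adding video features through a plain linear path with adding them through a gate. As rough intuition for that difference, the toy sketch below implements both on small feature vectors. It is an illustration under our own assumptions, not ThinkSound's architecture: the function names, the scalar weights, and the exact gate formula are hypothetical, and the real model works on learned high-dimensional features.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic function, mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def linear_fusion(audio: list[float], video: list[float], w_video: float) -> list[float]:
    """Add a linearly scaled video feature to each audio feature."""
    return [a + w_video * v for a, v in zip(audio, video)]


def gated_fusion(
    audio: list[float],
    video: list[float],
    w_gate: float,
    b_gate: float,
    w_video: float,
) -> list[float]:
    """Like linear_fusion, but a per-feature gate scales the video contribution.

    The gate depends on both inputs (hypothetical formula), so the model can
    suppress video information where it is unhelpful and pass it where it is.
    """
    fused = []
    for a, v in zip(audio, video):
        gate = sigmoid(w_gate * (a + v) + b_gate)  # value in (0, 1)
        fused.append(a + gate * w_video * v)
    return fused


audio = [1.0, 2.0]
video = [0.5, -0.5]

# A strongly negative bias closes the gate: the output stays close to the audio input.
closed = gated_fusion(audio, video, w_gate=0.0, b_gate=-50.0, w_video=2.0)

# A strongly positive bias opens the gate: the output approaches linear fusion.
opened = gated_fusion(audio, video, w_gate=0.0, b_gate=50.0, w_video=2.0)
```

The design point is that the gated variant contains the other two rows as limiting cases: a closed gate behaves like the audio-only setting and an open gate behaves like linear fusion.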

Impact of Model Size

| Size | FD ↓ | KL_PaSST ↓ | KL_PaNNs ↓ | DeSync ↓ | CLAP_CoT ↑ |
|---|---|---|---|---|---|
| Small | 40.80 | 1.64 | 1.38 | 0.46 | 0.41 |
| Medium | 36.80 | 1.56 | 1.34 | 0.46 | 0.44 |
| Large | 34.56 | 1.52 | 1.32 | 0.46 | 0.46 |

Frequently Asked Questions

What is ThinkSound?

ThinkSound is an AI framework for generating and editing audio for videos. Unlike traditional models, it uses a reasoning process called Chain-of-Thought (CoT) to understand the context of a video and create relevant, high-quality sound, much as a professional sound designer would.

How does Chain-of-Thought reasoning help?

Chain-of-Thought allows the model to break down a complex task (like "create a soundtrack for this video") into smaller, logical steps. For example, it might first identify the main objects and actions, then reason about the environment's acoustics, and finally decide on the appropriate sounds and their timing. This step-by-step process leads to more accurate and contextually aware audio generation.
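
The three-step decomposition described above can be sketched as a small data structure that assembles a CoT description from its parts. This is a hypothetical illustration only: the `CoTPlan` class, its field names, and the example text are assumptions made for this sketch, not the AudioCoT schema or ThinkSound's interface.

```python
from dataclasses import dataclass


@dataclass
class CoTPlan:
    """Hypothetical container for the three reasoning steps."""

    objects_and_actions: str  # step 1: what is visible and what it is doing
    acoustics: str            # step 2: how the environment shapes the sound
    sounds_and_timing: str    # step 3: which sounds to produce, and when

    def to_prompt(self) -> str:
        """Join the three reasoning steps into one numbered description."""
        steps = [self.objects_and_actions, self.acoustics, self.sounds_and_timing]
        return "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))


# Invented example text, loosely modeled on a tennis clip.
plan = CoTPlan(
    objects_and_actions="A player hits a tennis ball with a racket.",
    acoustics="The court is outdoors, so there is little reverberation.",
    sounds_and_timing="A racket impact at each swing, followed by the ball bouncing.",
)
print(plan.to_prompt())
```

Keeping the steps as separate fields, rather than one free-form string, makes it easy to revise a single step (for example, the timing) without rewriting the rest.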

What makes ThinkSound different?

Three main things: 1) Its use of CoT reasoning for more intelligent sound creation. 2) Its interactivity, which lets users edit audio, focus on specific objects, and refine the sound using natural language. 3) Its training data, AudioCoT, a dataset built specifically for this kind of reasoning-based audio generation.

Can I try ThinkSound myself?

Yes! We have provided an interactive demo on Hugging Face Spaces, linked at the top of this page. You can also explore the source code on GitHub to run the model yourself.