SEEDANCE 2.0

Native 2K Resolution · Multi-shot Narrative · Audio-Visual Sync

Redefining the "Director-Level" experience in AI video generation

Core Breakthroughs

Multi-shot Coherent Narrative

No more isolated 3-second clips. Seedance 2.0 follows complex script logic and generates multi-shot sequences that combine long shots, close-ups, and camera movements while staying consistent from shot to shot.

Native Audio-Visual Sync

Sound is generated together with the video. Seedance 2.0 supports precise lip-sync in multiple languages and generates sound effects (SFX) and background music (BGM) at the same time. "What you see is what you hear."

Multimodal Reference Control

Supports 0-5 reference images for precise control over character appearance, clothing style, and scene composition. Also supports start/end frame interpolation.
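
As a minimal sketch of how these limits might be checked before a job is submitted, the hypothetical `GenerationRequest` below enforces the 0-5 reference-image range and carries optional start/end frames. The class, field names, and error messages are assumptions made for illustration; they are not part of any published Seedance API.

```python
from dataclasses import dataclass, field
from typing import List, Optional

MAX_REFERENCE_IMAGES = 5  # upper bound stated in the text above


@dataclass
class GenerationRequest:
    """Hypothetical request payload; all field names are illustrative."""
    prompt: str
    reference_images: List[str] = field(default_factory=list)  # paths or URLs
    start_frame: Optional[str] = None  # optional first frame for interpolation
    end_frame: Optional[str] = None    # optional last frame for interpolation

    def validate(self) -> None:
        """Raise ValueError if the request breaks the stated limits."""
        if not self.prompt.strip() and not self.reference_images:
            raise ValueError("provide a prompt or at least one reference image")
        if len(self.reference_images) > MAX_REFERENCE_IMAGES:
            raise ValueError(
                f"at most {MAX_REFERENCE_IMAGES} reference images are supported, "
                f"got {len(self.reference_images)}"
            )


# Usage: a request with two reference images and a fixed first frame.
request = GenerationRequest(
    prompt="A chef plates a dessert in a sunlit kitchen",
    reference_images=["chef.png", "kitchen.png"],
    start_frame="first_frame.png",
)
request.validate()  # passes silently; a sixth image would raise ValueError
```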

2K Cinematic Quality

Native 2K output with rich detail and realistic lighting. A rebuilt physics engine makes motion far more physically plausible, eliminating "liquefaction" and "floating" artifacts.

Instant Draft Mode

No 40-second wait: previews arrive in seconds. Draft mode gives you a low-cost way to quickly check storyboards and ideas before committing to high-resolution rendering.

Long Video Completion & Extension

Seedance 2.0 does more than generate from scratch: it can also intelligently complete and extend existing videos. Fill in missing segments or let the story keep going.

Featured Generations

Seedance 2.0 showcases master-level camera language and lighting texture.


Beyond Generation

Full-Modal Architecture

Seedance 2.0 adopts a Unified Multimodal Joint Generation Architecture. By rebuilding the underlying architecture, data pipeline, and post-training from the ground up, it achieves precise synergy between visual and auditory streams.

MMDiT Architecture

Facilitates deep cross-modal interaction, ensuring precise temporal synchronization and semantic consistency between visual and auditory streams.
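
The page does not describe Seedance 2.0's internals beyond the name, so the following is only a sketch of the general MMDiT-style idea: each modality keeps its own projection weights, while a single attention step runs over the concatenated token sequence so video and audio tokens can attend to each other. The function names, dimensions, and random weights are all illustrative assumptions, and the pure-Python matrix code favors readability over speed.

```python
import math
import random
from typing import Dict, List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product: rows of a times columns of b."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


def joint_attention(
    video: Matrix, audio: Matrix, weights: Dict[str, Matrix]
) -> Tuple[Matrix, Matrix]:
    """One joint self-attention step over video and audio tokens."""
    # Per-modality projections; "+" concatenates the token rows of both streams.
    q = matmul(video, weights["video_q"]) + matmul(audio, weights["audio_q"])
    k = matmul(video, weights["video_k"]) + matmul(audio, weights["audio_k"])
    v = matmul(video, weights["video_v"]) + matmul(audio, weights["audio_v"])

    scale = 1.0 / math.sqrt(len(q[0]))
    k_t = [list(col) for col in zip(*k)]  # transpose of K
    scores = [[s * scale for s in row] for row in matmul(q, k_t)]
    attn = [softmax(row) for row in scores]  # each token attends to both modalities
    out = matmul(attn, v)

    # Split the joint sequence back into per-modality streams.
    return out[: len(video)], out[len(video):]


rng = random.Random(0)
dim = 4
weights = {
    f"{modality}_{proj}": random_matrix(dim, dim, rng)
    for modality in ("video", "audio")
    for proj in "qkv"
}
video_tokens = random_matrix(6, dim, rng)  # e.g. 6 video patch tokens
audio_tokens = random_matrix(3, dim, rng)  # e.g. 3 audio frame tokens
video_out, audio_out = joint_attention(video_tokens, audio_tokens, weights)
print(len(video_out), len(audio_out))  # prints: 6 3
```

Because the attention matrix spans both streams, every output video token is a mixture of video and audio values (and vice versa), which is one plausible mechanism behind the temporal synchronization described above.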

Multi-stage Data Pipeline

Prioritizes audio-video coherence and motion expressiveness, and uses curriculum-based data scheduling.
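
Curriculum-based scheduling generally means changing the training data mix as training progresses. The sketch below linearly shifts sampling weights from easier to harder material; the bucket names, the weights, and the linear schedule are assumptions chosen for illustration, since the page does not say how Seedance 2.0 schedules its data.

```python
from typing import Dict

# Hypothetical data buckets, ordered roughly from easier to harder samples.
EARLY_MIX = {"static_scenes": 0.6, "simple_motion": 0.3, "complex_av_sync": 0.1}
LATE_MIX = {"static_scenes": 0.1, "simple_motion": 0.3, "complex_av_sync": 0.6}


def curriculum_mix(step: int, total_steps: int) -> Dict[str, float]:
    """Linearly interpolate sampling weights from EARLY_MIX to LATE_MIX."""
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    progress = min(max(step / total_steps, 0.0), 1.0)  # clamp to [0, 1]
    return {
        name: (1 - progress) * EARLY_MIX[name] + progress * LATE_MIX[name]
        for name in EARLY_MIX
    }


# Usage: inspect the mix at the start, midpoint, and end of training.
for step in (0, 500, 1000):
    print(step, curriculum_mix(step, total_steps=1000))
```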

Reinforcement Learning from Human Feedback (RLHF)

Applies RL algorithms tailored to audio-video generation, improving motion quality, visual aesthetics, and audio fidelity.

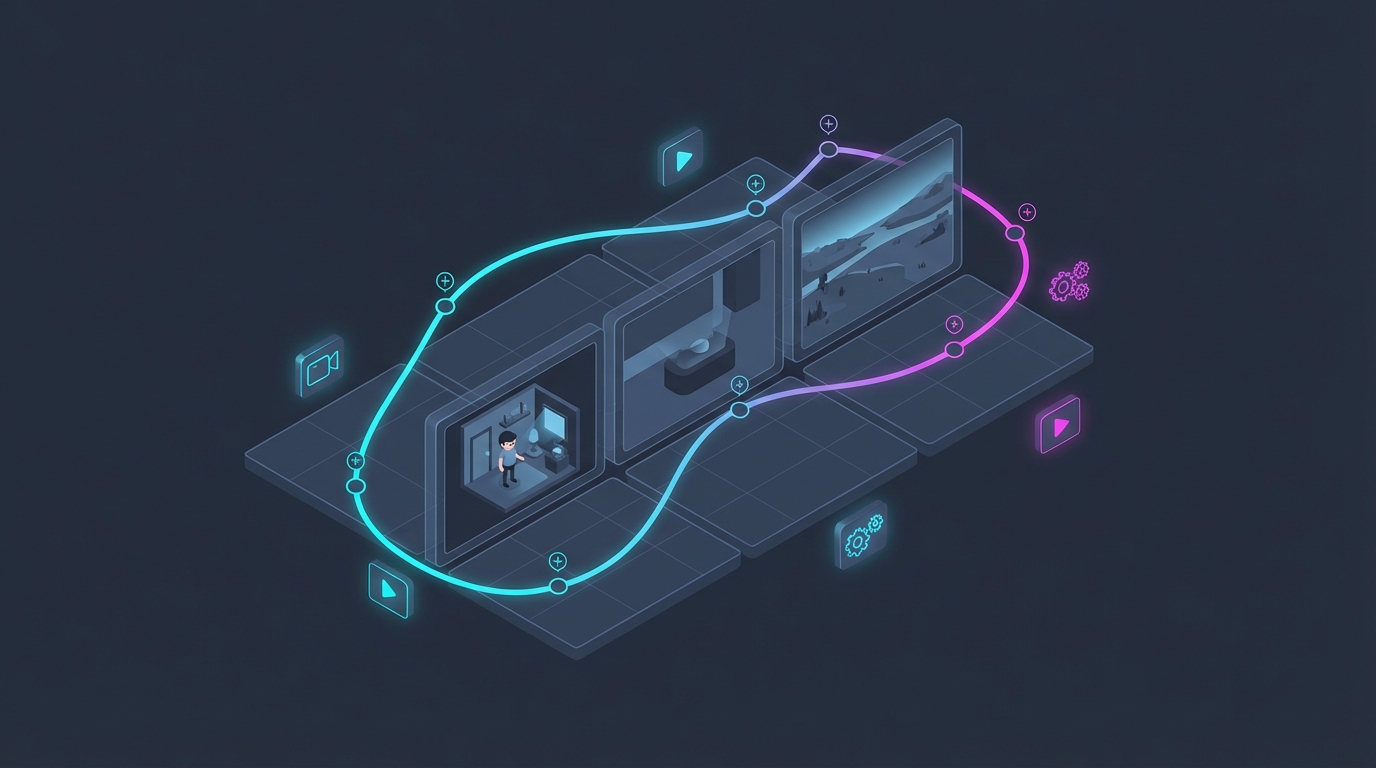

How to Start Creating

Select Mode & Input

Choose Text-to-Video or Image-to-Video. Enter your creative prompt or upload 1-5 reference images as character/style guides.

Draft Quick Verification

Enable "Draft Mode" to generate low-resolution preview videos in seconds. Confirm that the storyboard, camera movement, and action meet your expectations.

Render 2K Final

Once you are satisfied with the draft, start the high-resolution render with one click. Seedance 2.0 then generates the final 2K video with native sound effects.
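
For readers who think in code, the three steps above can be sketched as a draft-then-render loop. Everything here is hypothetical: `FakeVideoClient` is an in-memory stand-in so the example runs on its own, and the `generate` signature is not taken from Jimeng, Volcano Engine, or any real SDK.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Video:
    prompt: str
    resolution: str  # "draft" or "2k"


class FakeVideoClient:
    """Stand-in for a real generation service; it only echoes the request."""

    def generate(self, prompt: str, reference_images: List[str], draft: bool) -> Video:
        return Video(prompt=prompt, resolution="draft" if draft else "2k")


def draft_then_render(
    client: FakeVideoClient,
    prompt: str,
    reference_images: List[str],
    approve: Callable[[Video], bool],
    max_drafts: int = 3,
) -> Video:
    """Iterate on cheap drafts, then render once at full resolution."""
    if len(reference_images) > 5:
        raise ValueError("at most 5 reference images are supported")
    for _ in range(max_drafts):
        draft = client.generate(prompt, reference_images, draft=True)
        if approve(draft):
            # Only the approved idea pays for the expensive 2K render.
            return client.generate(prompt, reference_images, draft=False)
    raise RuntimeError("no draft was approved; revise the prompt or references")


final = draft_then_render(
    FakeVideoClient(),
    prompt="A detective walks through neon-lit rain",
    reference_images=[],
    approve=lambda video: True,  # replace with a real human review step
)
print(final.resolution)  # prints: 2k
```

The point of the design is cost control: the review callback gates the high-resolution render, so rejected ideas never consume full rendering time.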

Ready to Direct?

Seedance 2.0 is now live on Jimeng and Volcano Engine.

No professional equipment needed. Your creativity is the only limit.